[ 0 -1 0 ; -1 4 -1 ; 0 -1 0 ] or [ -1 -1 -1 ; -1 8 -1 ; -1 -1 -1 ] (each semicolon ends a row of the 3x3 kernel). I forget which, it was a *long* time ago; I think it was in the "Orange Book", OpenGL Shading Language 2nd ed.

51 / 55

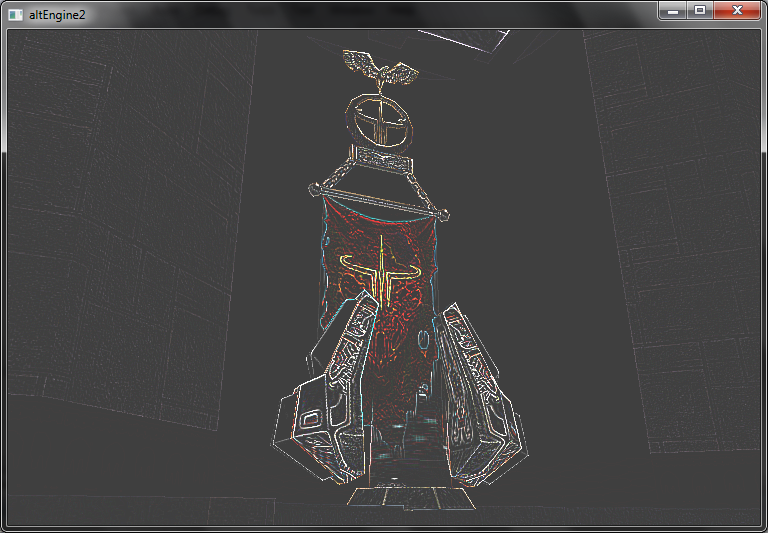

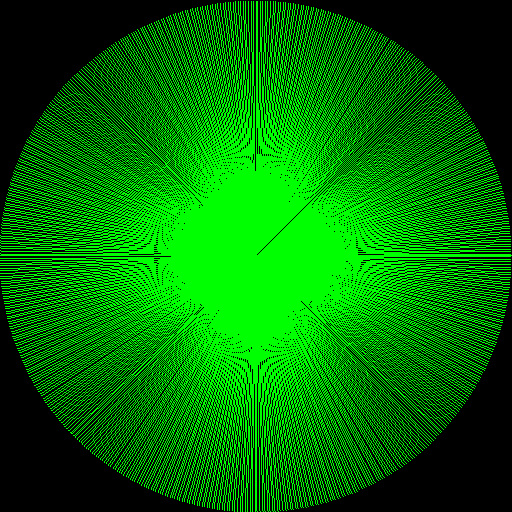

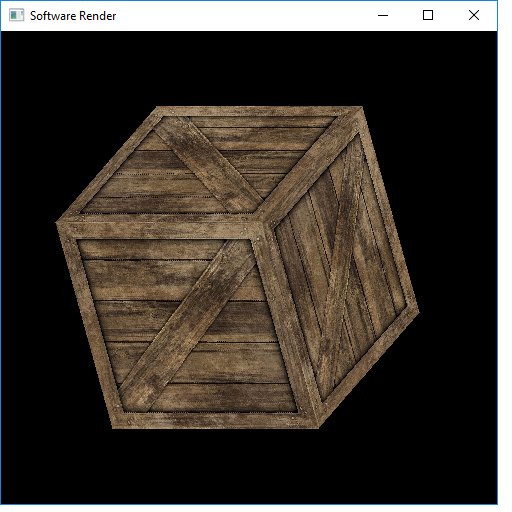

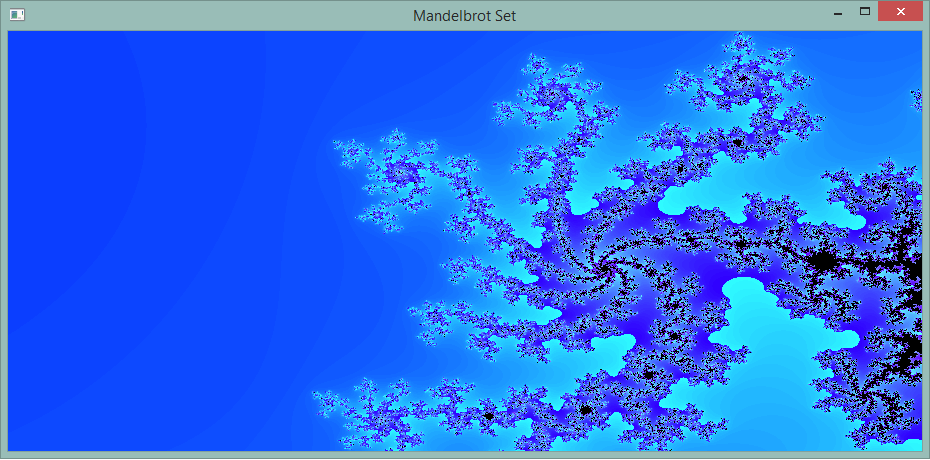

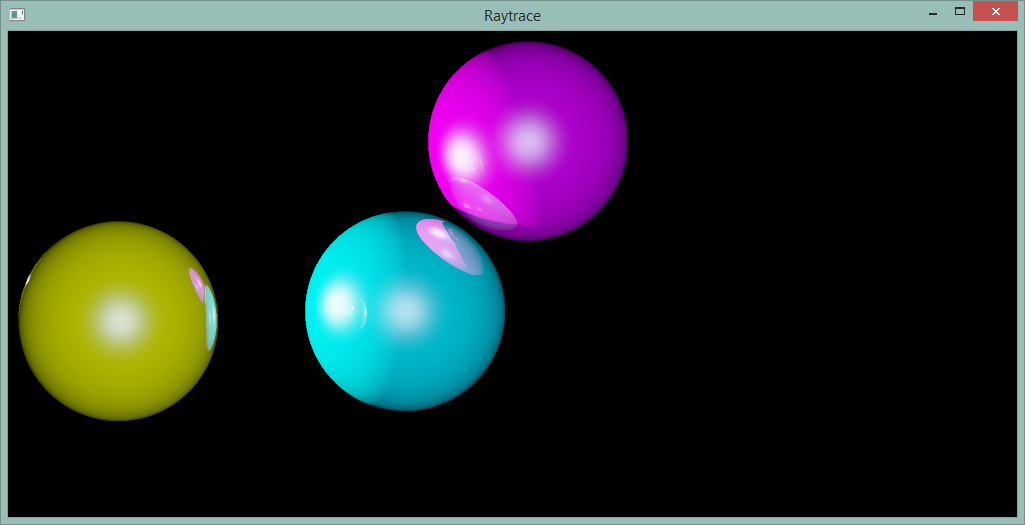

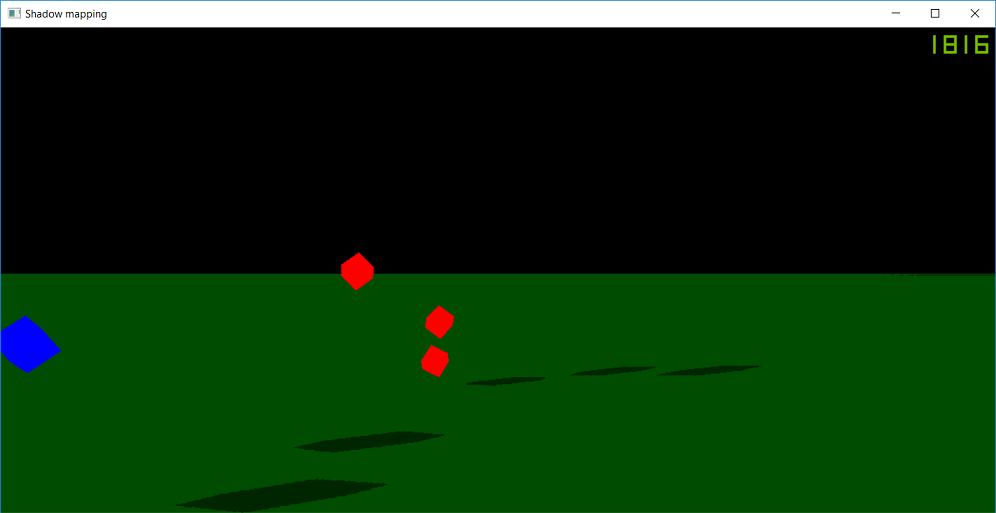

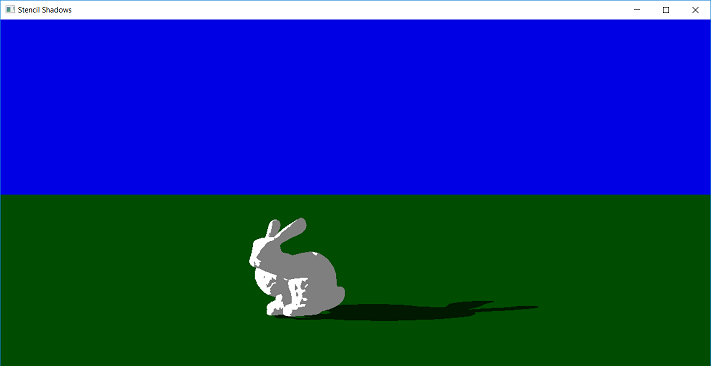

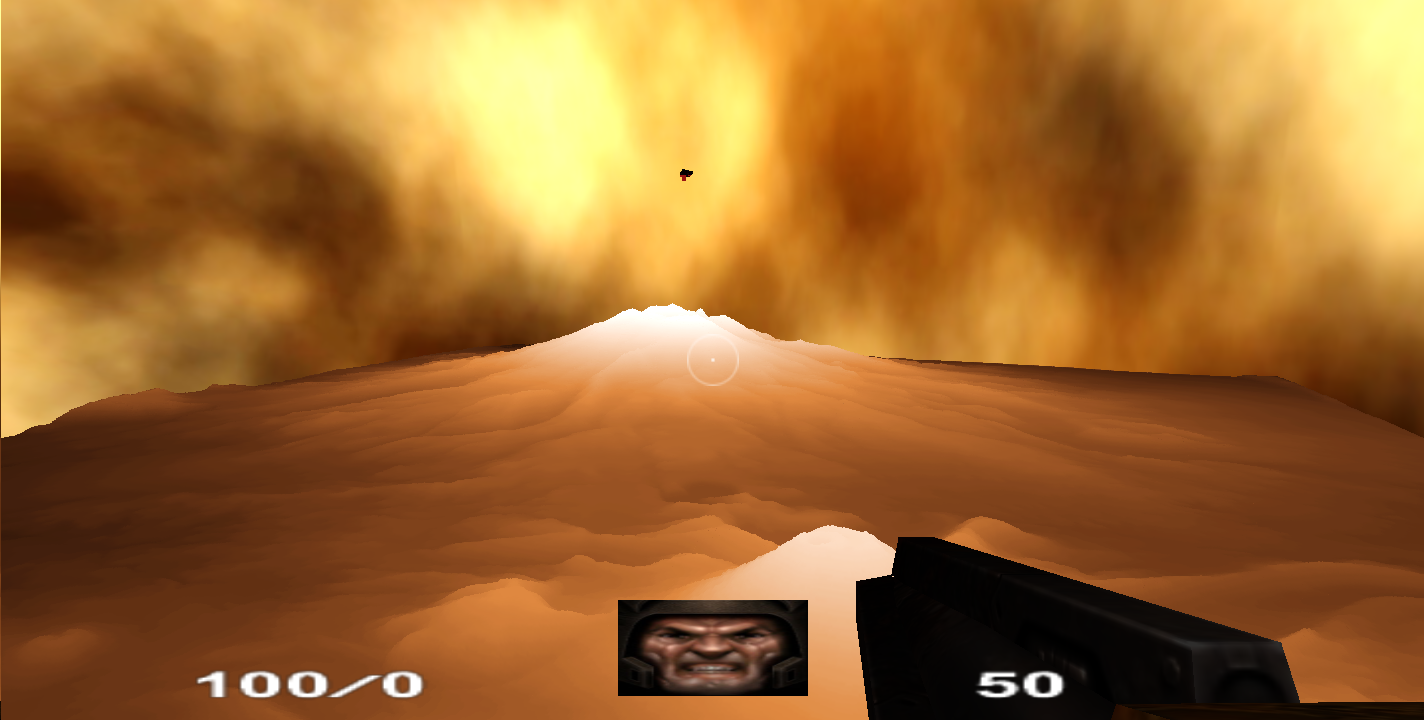

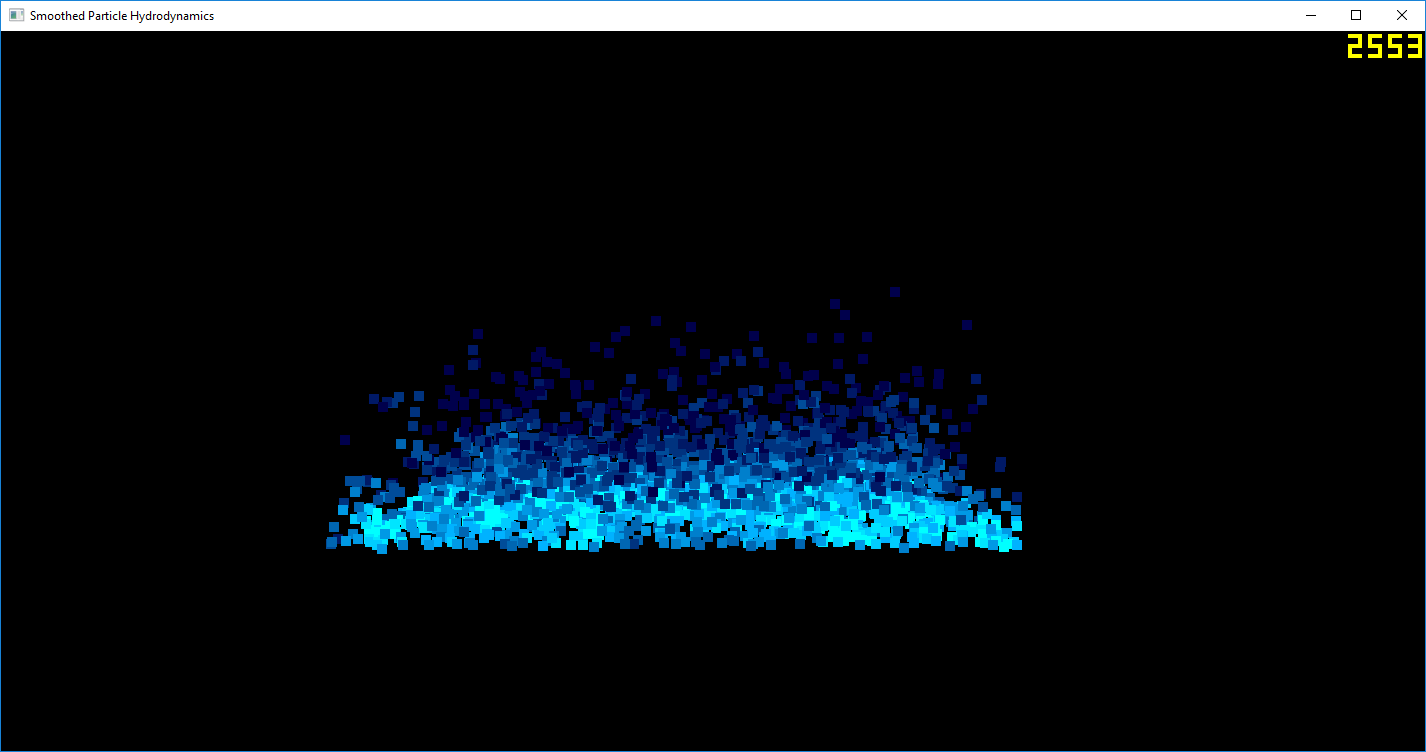

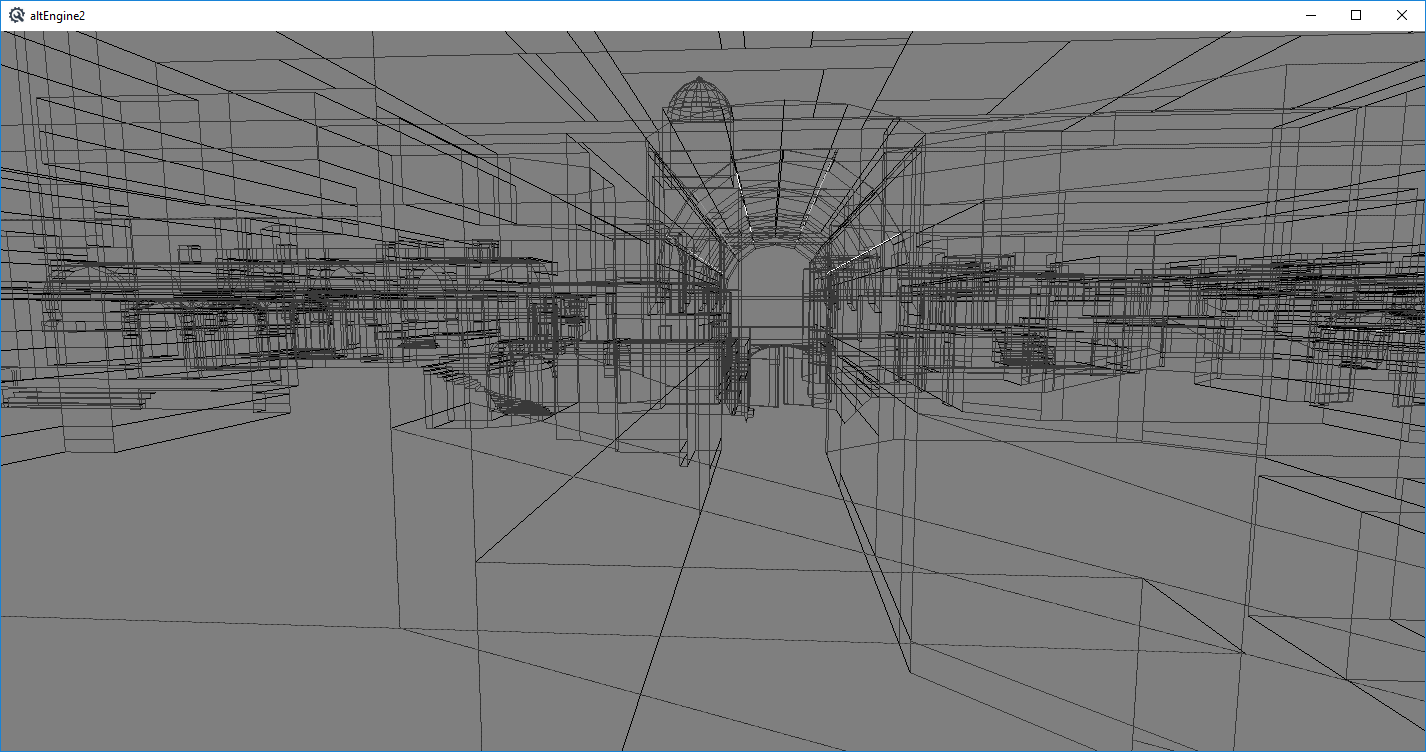

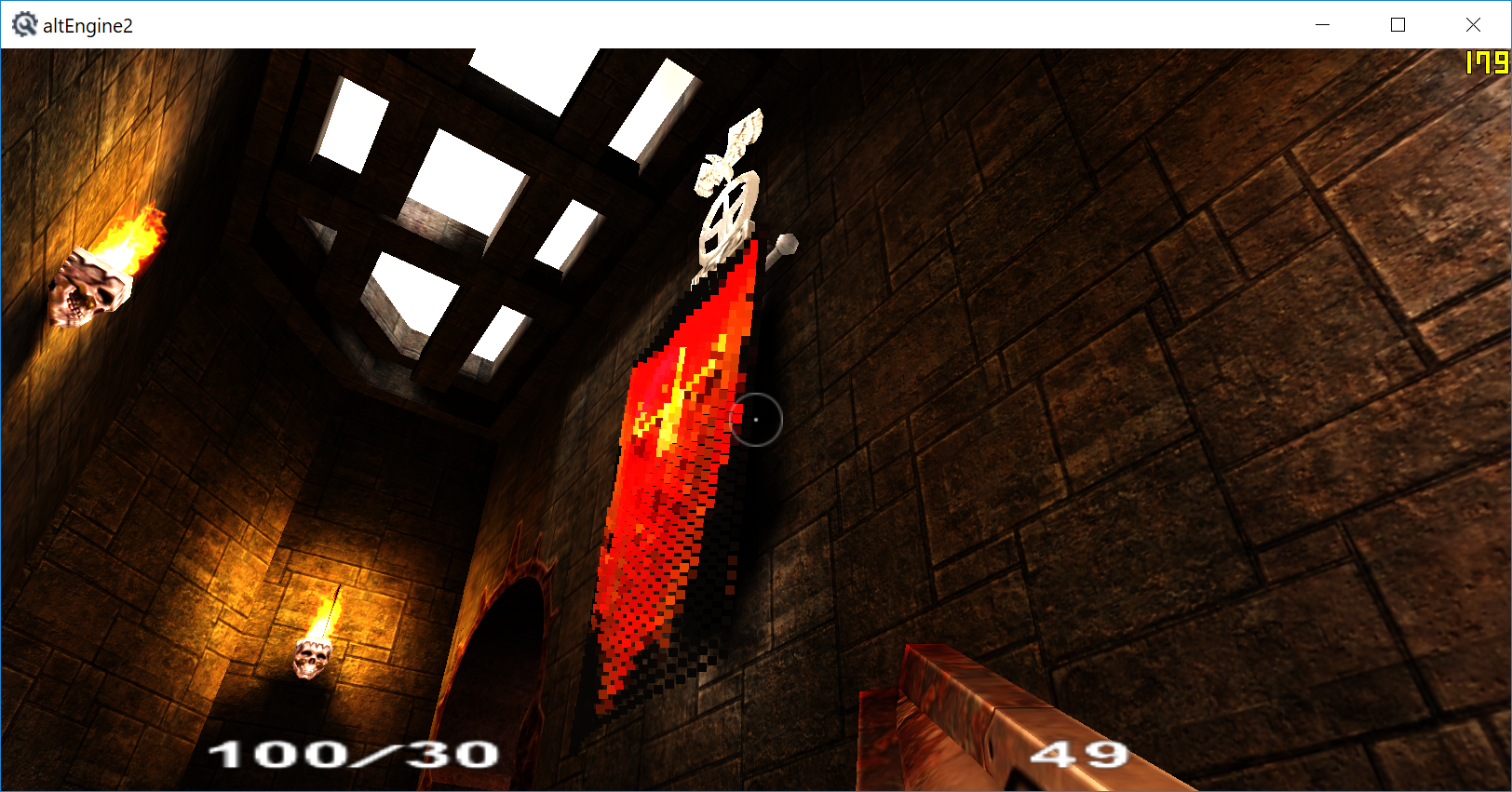

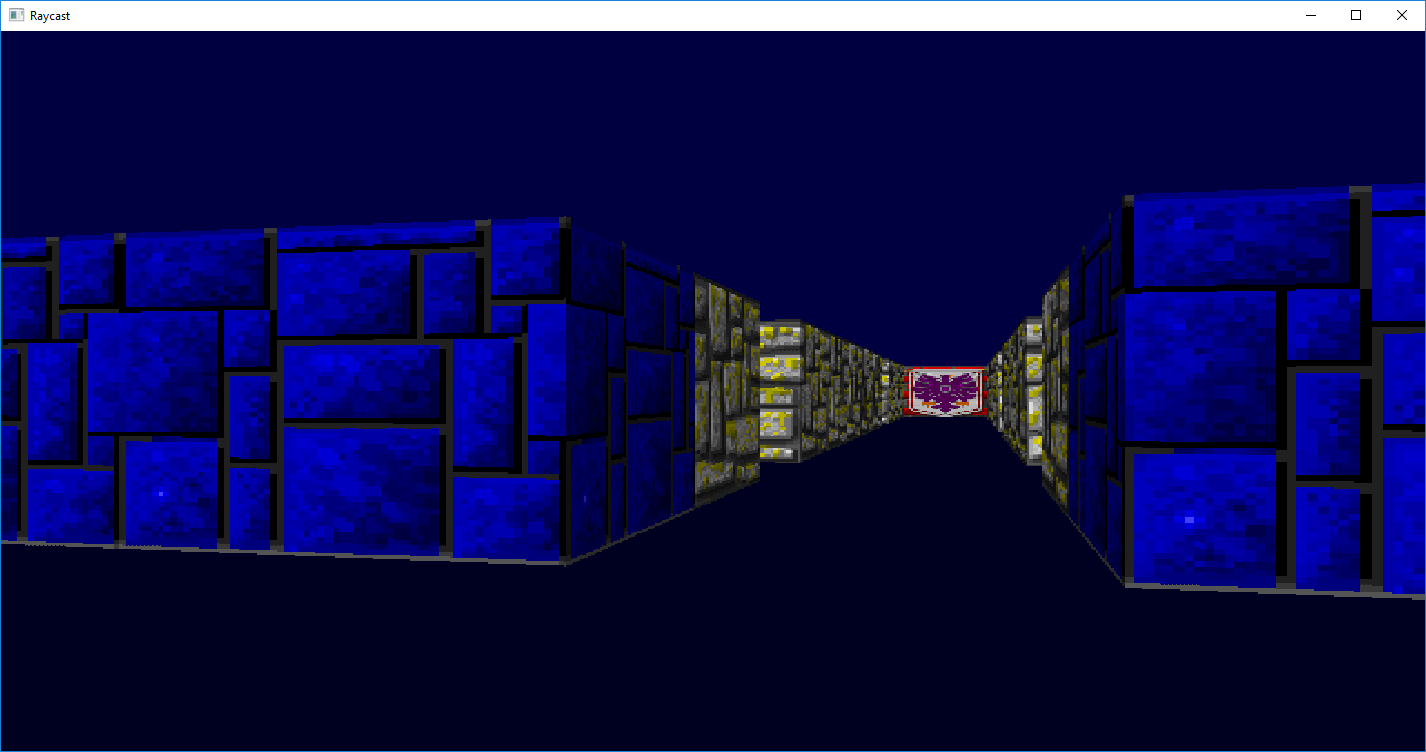

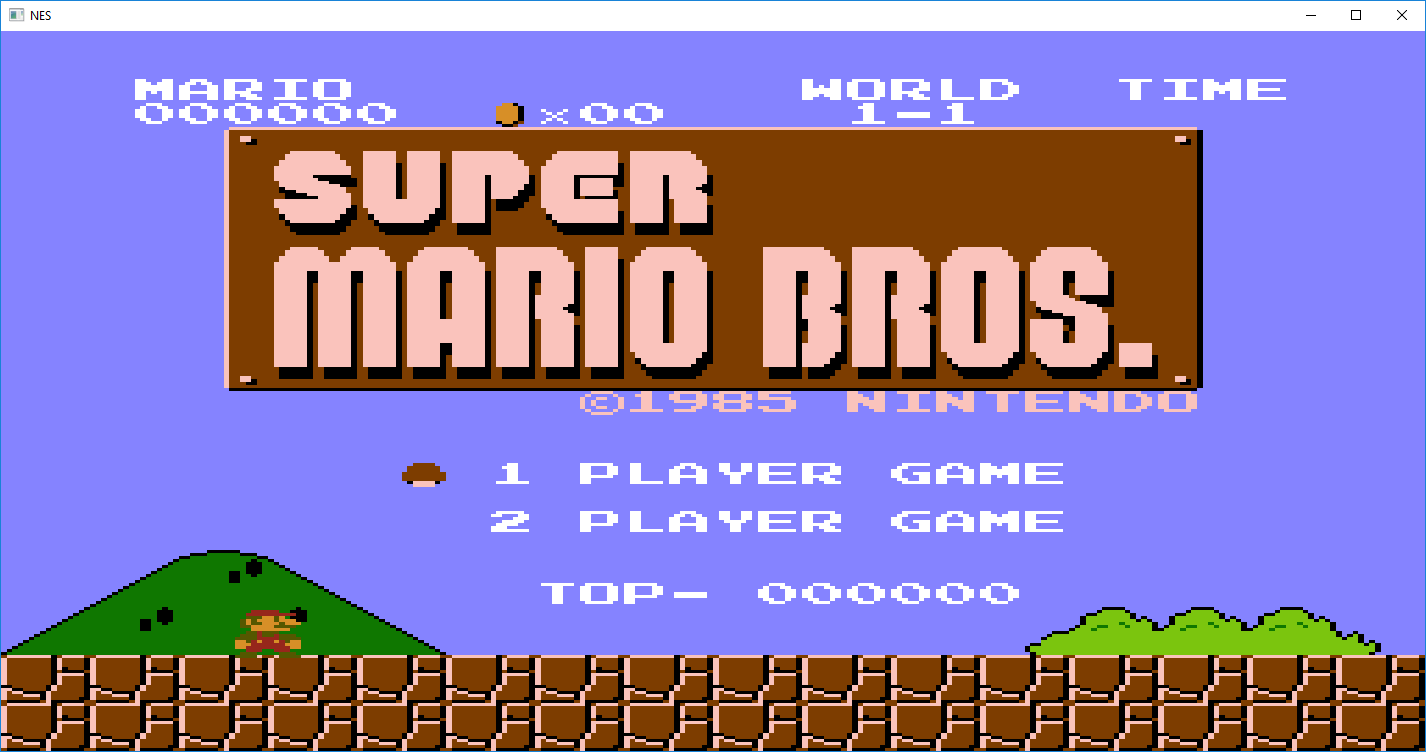

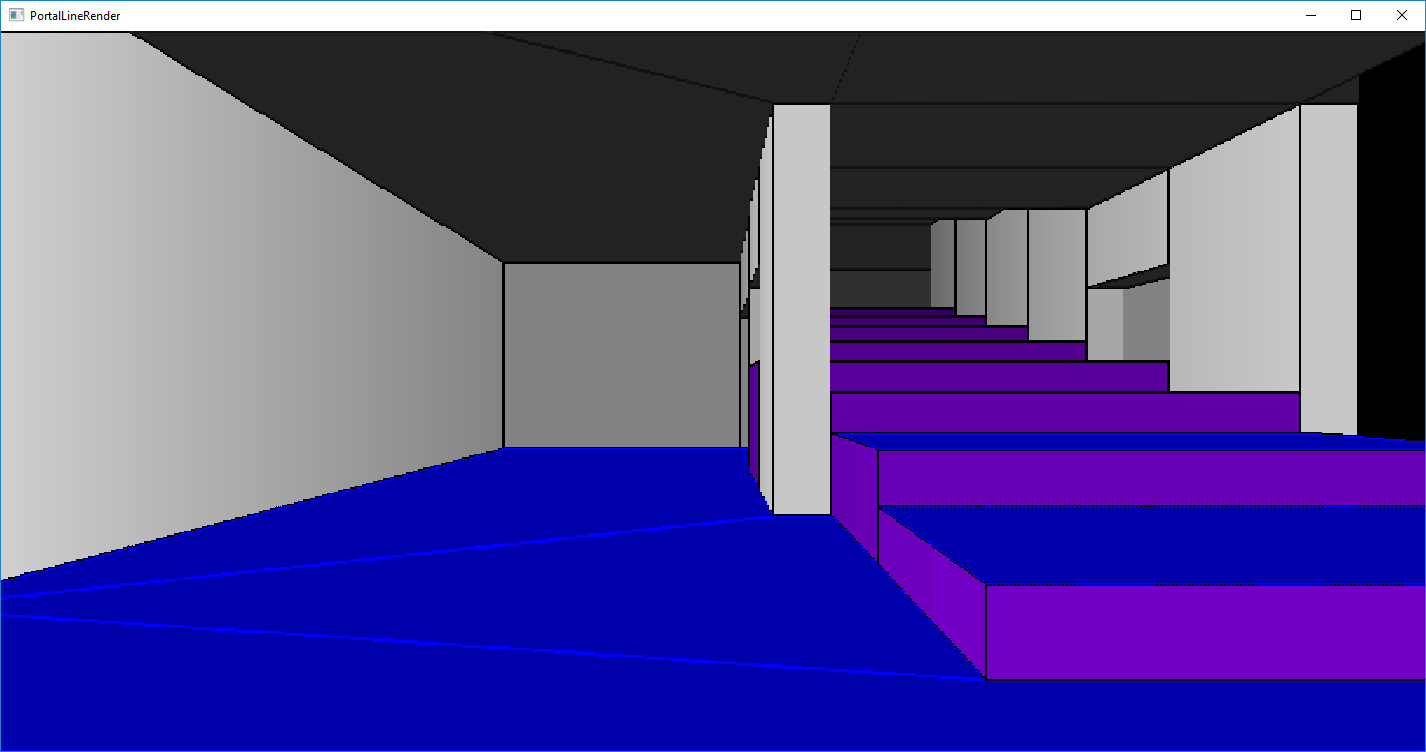

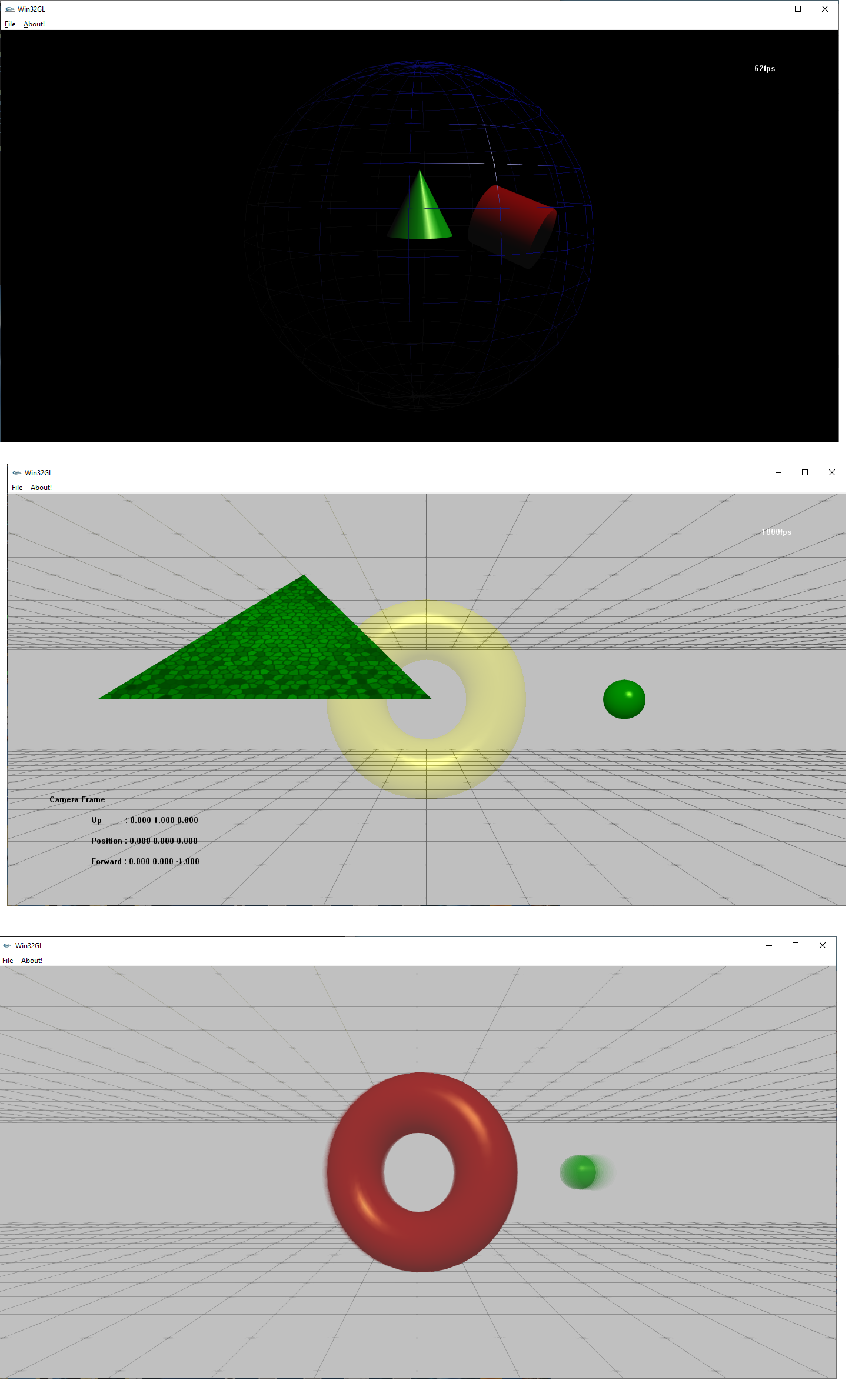

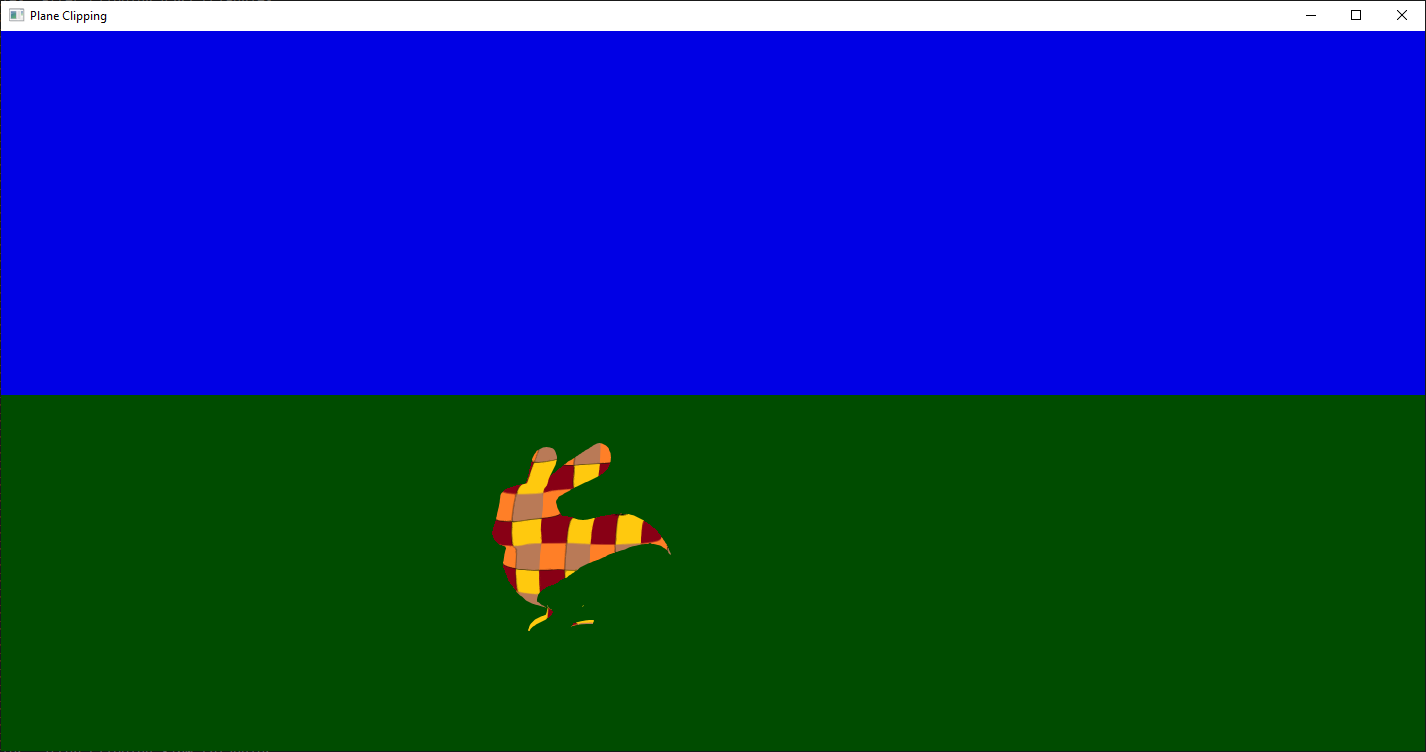

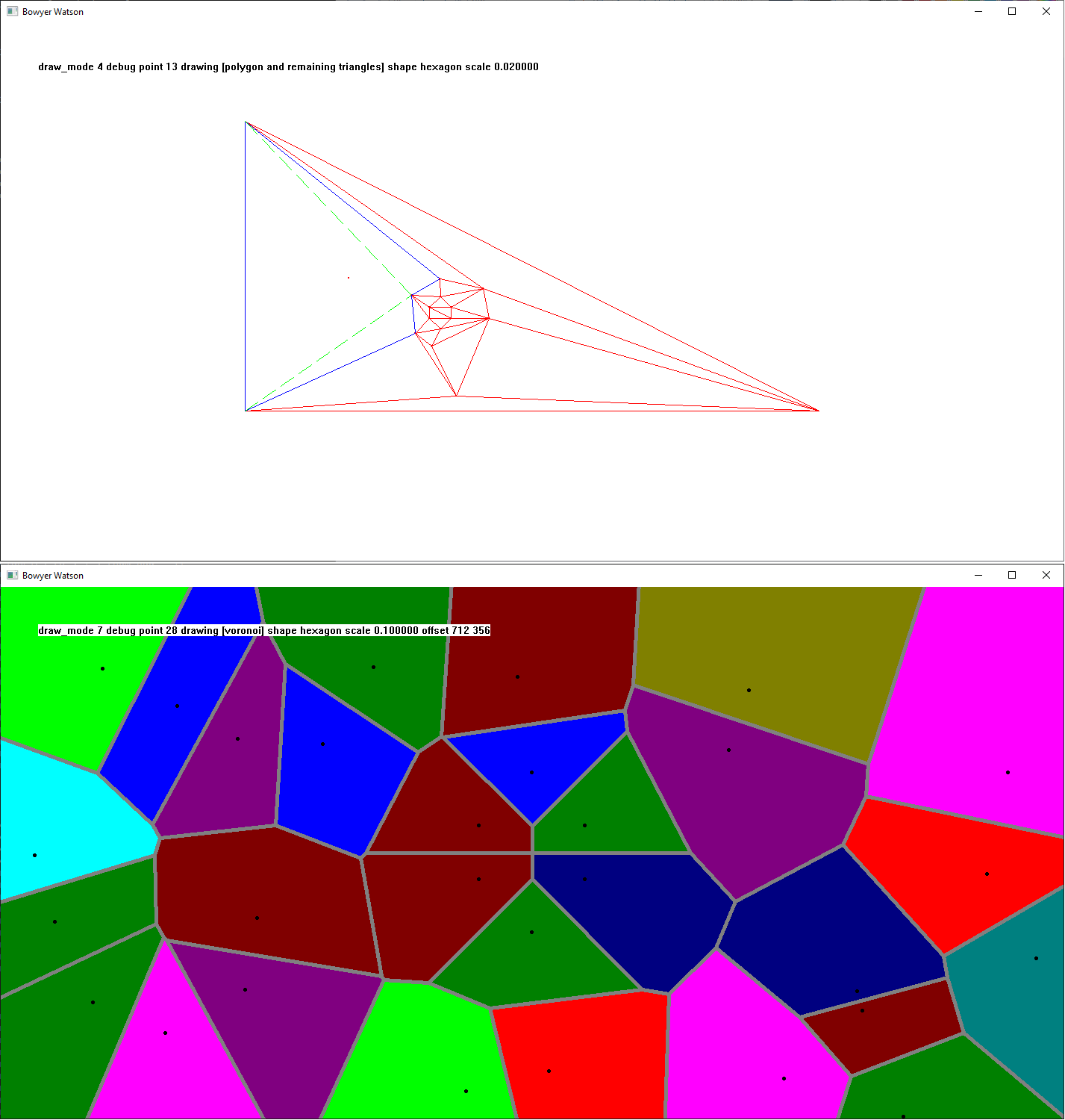

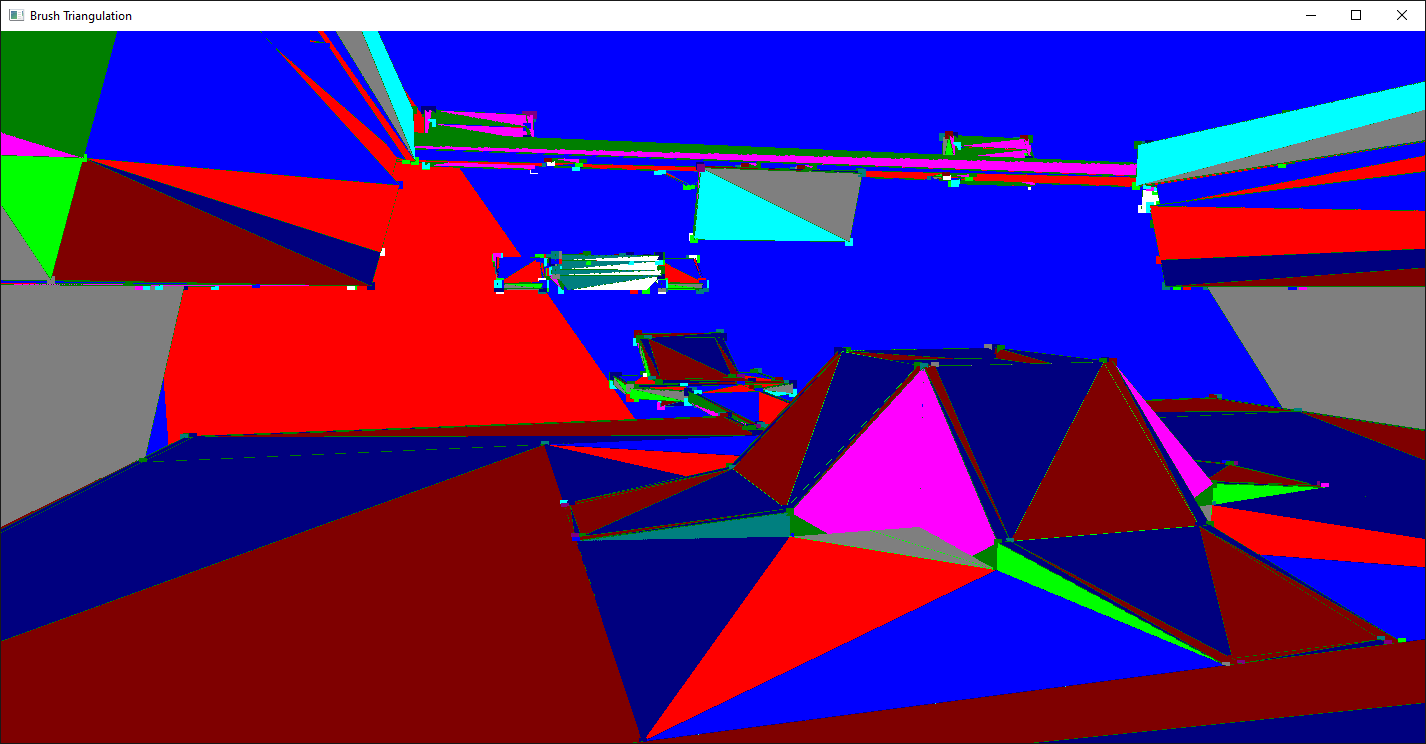

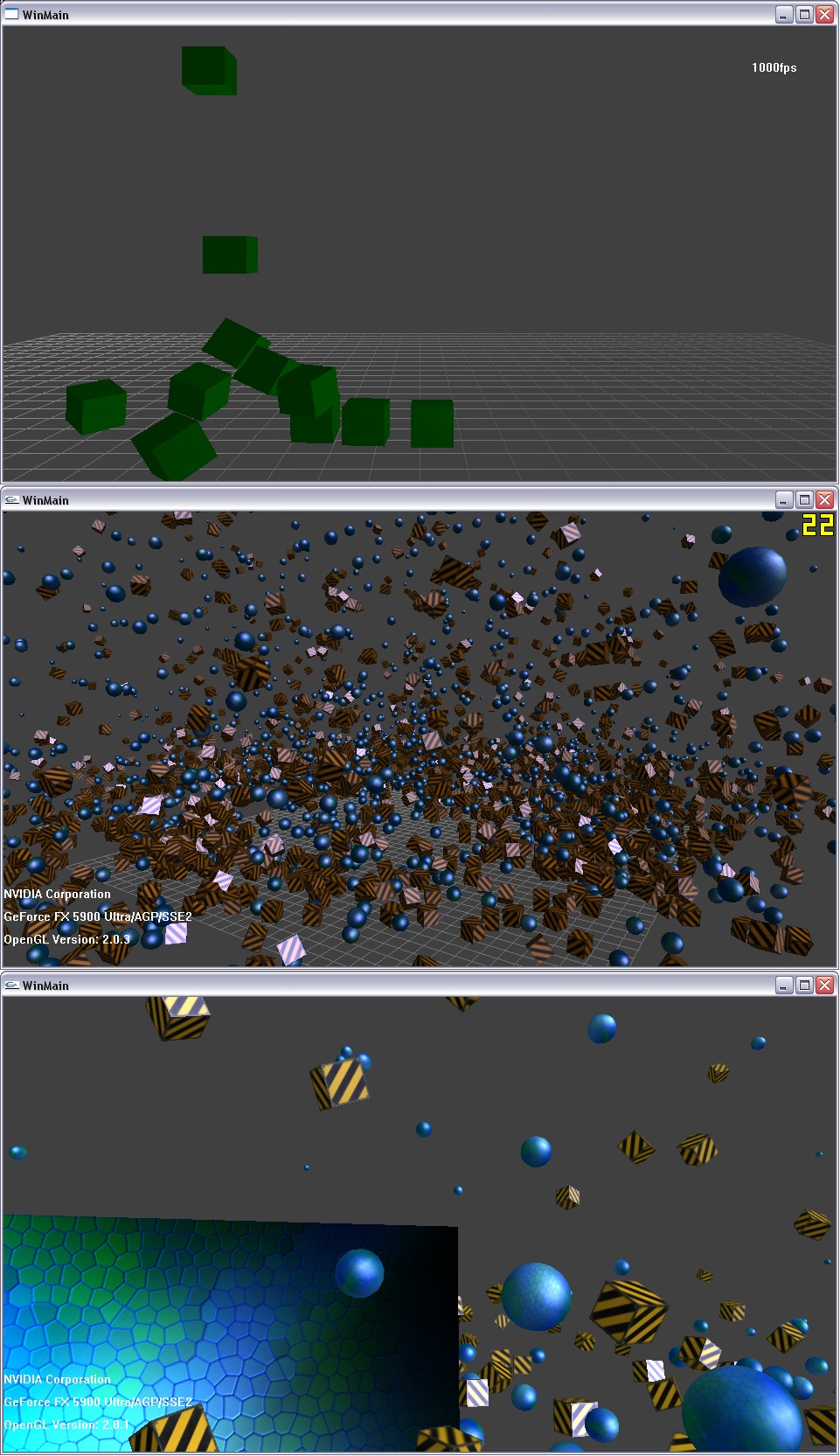

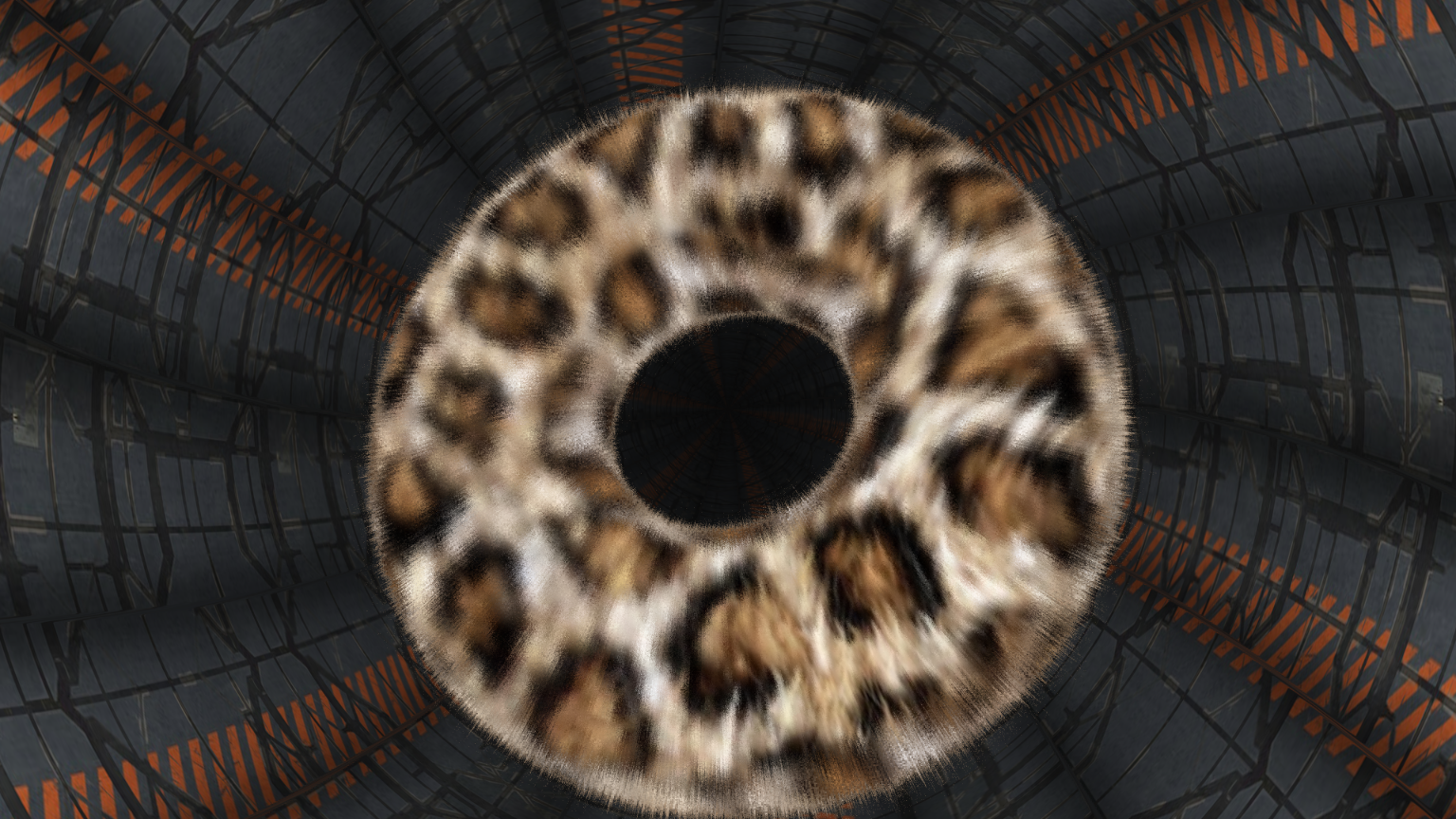

So, due to last-minute scheduling issues I had to switch from an AI course to a computer graphics course, which should be easy for me. The problem is that everything in this course is in WebGL and JavaScript. No big deal really: JavaScript is a bit different, while OpenGL is still pretty much the same. You can see a bunch of my assignments here in the CG directory.

Around the same time I stumbled into "ray marching", which uses the fragment shader to do ray-tracing-like rendering using signed distance functions. A signed distance function is essentially a function that, when you give it a position, tells you how far away you are from something (negative if you are inside it). So instead of calculating intersections against triangles, spheres, and boxes like in ray tracing, you step along the ray by the distance the function gives you and wait for that distance to approach zero. See this for a good introduction or maybe this one.

Anyway, it's not too much trouble to connect Shadertoy-style shaders (where almost everything is ray marched) to WebGL, or really to any application, as you just need a few extra functions in your fragment shader and a few uniforms for time, resolution, and such. This is a good reference for connecting Shadertoy to WebGL, but it applies to real applications as well.

The image is from a FurMark-style donut that uses ray marching. There are tons of cool effects out there, but the planet by reinder is very cool and combines multiple Shadertoy entries. Reinder uses the Atmospheric Scattering by GLtracy in his demo, which might even look better than O'Neil's GPU Gems scattering, although they are probably both using the same code. Oh yeah, if you are interested in ray marching shapes other than cubes and spheres, check out Inigo's Primitives page, which has quite a few different shapes (each shape is defined by a different signed distance function, no vertices). The source code is also filled with interesting links for you to check out.
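To make the signed distance idea concrete, here is a minimal sketch of the marching loop, written in Python rather than GLSL so it can be run on its own. The names `sphere_sdf` and `ray_march`, the sphere position, and the step and epsilon limits are all made up for illustration; a real shader would run the same loop once per pixel in the fragment shader.

```python
import math

def sphere_sdf(p, center=(0.0, 0.0, 5.0), radius=1.0):
    """Signed distance from point p to a sphere: negative inside, zero on the surface."""
    dx, dy, dz = p[0] - center[0], p[1] - center[1], p[2] - center[2]
    return math.sqrt(dx * dx + dy * dy + dz * dz) - radius

def ray_march(origin, direction, sdf, max_steps=64, max_dist=100.0, eps=1e-4):
    """Walk along the ray, stepping by the SDF value each time.

    direction is assumed to be normalized. Returns the distance travelled
    to the surface, or None if the ray never gets close to anything.
    """
    t = 0.0
    for _ in range(max_steps):
        p = (origin[0] + t * direction[0],
             origin[1] + t * direction[1],
             origin[2] + t * direction[2])
        d = sdf(p)
        if d < eps:        # close enough to the surface: call it a hit
            return t
        t += d             # safe step: nothing can be closer than d
        if t > max_dist:   # wandered off into empty space
            break
    return None

# A ray straight down +z hits the sphere; a ray along +y misses it.
hit = ray_march((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), sphere_sdf)
miss = ray_march((0.0, 0.0, 0.0), (0.0, 1.0, 0.0), sphere_sdf)
```

Swapping `sphere_sdf` for any other distance function changes the shape without touching the loop, which is why the primitives mentioned above need no vertices.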

52 / 55

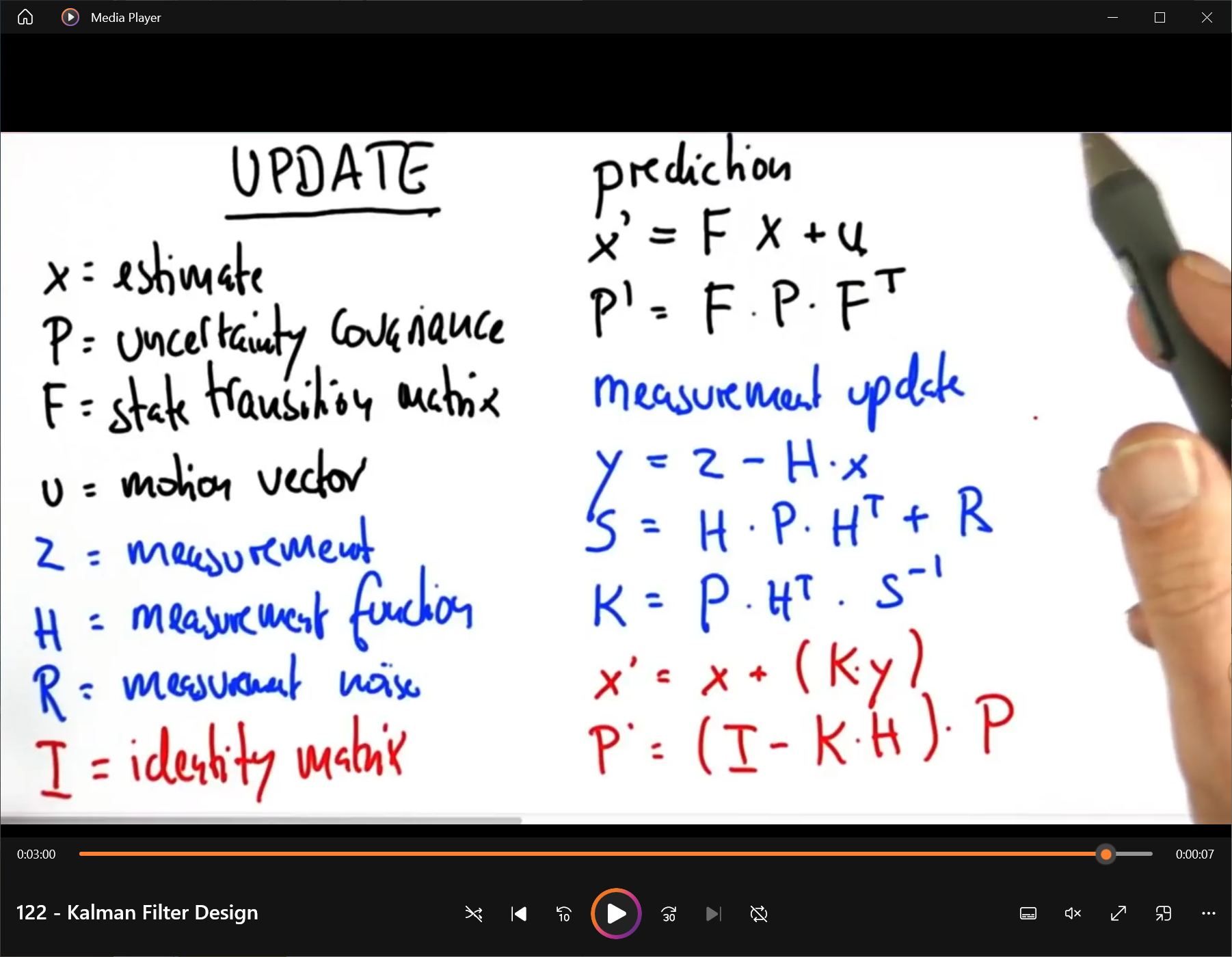

So, this is a screenshot of probably the most important part of a series on Kalman filters from Dr. Sebastian Thrun in the OMSCS course. Hopefully they don't get too mad about a single screenshot; I had typed the same thing up in LaTeX, but liked the colors of the slide more to be honest. Anyway, Kalman filters are a bit nebulous because I find a lot of explanations of them aren't really correct. Lots of people repeat what they've been told and lose information along the way (similar to how neural networks are often explained nowadays). I was going to attempt to put a more detailed explanation here, but it really needs to be in a paper, so I threw the following together so far, [kalman.pdf], and will likely update it over time. It is pretty specific to tracking particle motion using kinematic equations. I do have Python code that implements the Kalman filter on a two-dimensional tracking problem we used in the course using multiple methods (two one-dimensional passes, a single two-dimensional pass, and both variants of 3D tracking, but with the Z terms set to zero as it is a two-dimensional problem).

Anyway, Kalman filtering takes a measured state at time T and predicts the state at time T + 1, and is useful for a lot of applications. You can implement a Kalman filter using the above linear equations and modify the F "state transition" matrix to track any number of variables. But in most explanations of the Kalman filter people don't really provide the whole picture and leave things in terms of X(t+1) = something*X(t), which is nowhere near enough information. Even Dr. Thrun's explanation in the course focuses more on concepts and less on application details, this slide being a kind of "don't worry about understanding this" type of thing. But his course was originally targeted at Udacity and not really at graduate students, so I think the explanation was tailored to make Udacity people feel smart while not really challenging them mathematically.
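For readers who want something runnable, here is a minimal sketch of one measurement update plus one prediction for a [position, velocity] state. It is not the course code and not the contents of kalman.pdf; it uses the standard textbook form of the equations (y = z - Hx, S = HPH^T + R, K = PH^T S^-1, then x = Fx and P = FPF^T), which I am assuming matches the slide. The function name `kalman_step`, the choice F = [[1, dt], [0, 1]], H = [1, 0], and the omission of control input and process noise are all simplifications for illustration.

```python
def kalman_step(x, P, z, dt=1.0, r=1.0):
    """Measurement update followed by prediction for a [pos, vel] state.

    x: [pos, vel], P: 2x2 covariance as nested lists, z: measured position,
    r: measurement noise variance. Only position is measured (H = [1, 0]).
    """
    # --- measurement update ---
    y = z - x[0]                       # residual y = z - H x
    s = P[0][0] + r                    # S = H P H^T + R (a scalar here)
    k = [P[0][0] / s, P[1][0] / s]     # K = P H^T / S
    x = [x[0] + k[0] * y, x[1] + k[1] * y]
    # P = (I - K H) P, with (I - K H) = [[1 - k0, 0], [-k1, 1]]
    P = [[(1.0 - k[0]) * P[0][0], (1.0 - k[0]) * P[0][1]],
         [P[1][0] - k[1] * P[0][0], P[1][1] - k[1] * P[0][1]]]

    # --- prediction ---
    x = [x[0] + dt * x[1], x[1]]       # x = F x
    a = P[0][0] + dt * P[1][0]         # first row of F P
    b = P[0][1] + dt * P[1][1]
    P = [[a + dt * b, b],              # (F P) F^T
         [P[1][0] + dt * P[1][1], P[1][1]]]
    return x, P

# Start with no idea about position or velocity, then feed it 1, 2, 3.
x = [0.0, 0.0]
P = [[1000.0, 0.0], [0.0, 1000.0]]
for z in [1.0, 2.0, 3.0]:
    x, P = kalman_step(x, P, z)
```

After the three measurements the state should sit close to position 4 and velocity 1, even though velocity was never measured; it is inferred through the off-diagonal terms of P. Tracking more variables means growing x, P, F, and H, at which point S becomes a matrix that has to be inverted.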

I'll probably come back here and add a C++ example of the various flavors I provided in the PDF file, but I'm not sure I want to go to the trouble of building a testing framework around it like the asteroid jumping example they provide in class. I would post the Python code, but they use the same code every semester and have policies against posting your solution anywhere online, to prevent people from copy-pasting solutions.

-- Also, you have to question whether I'm being another one of those people I talked about who provide second-hand explanations ;o)


53 / 55

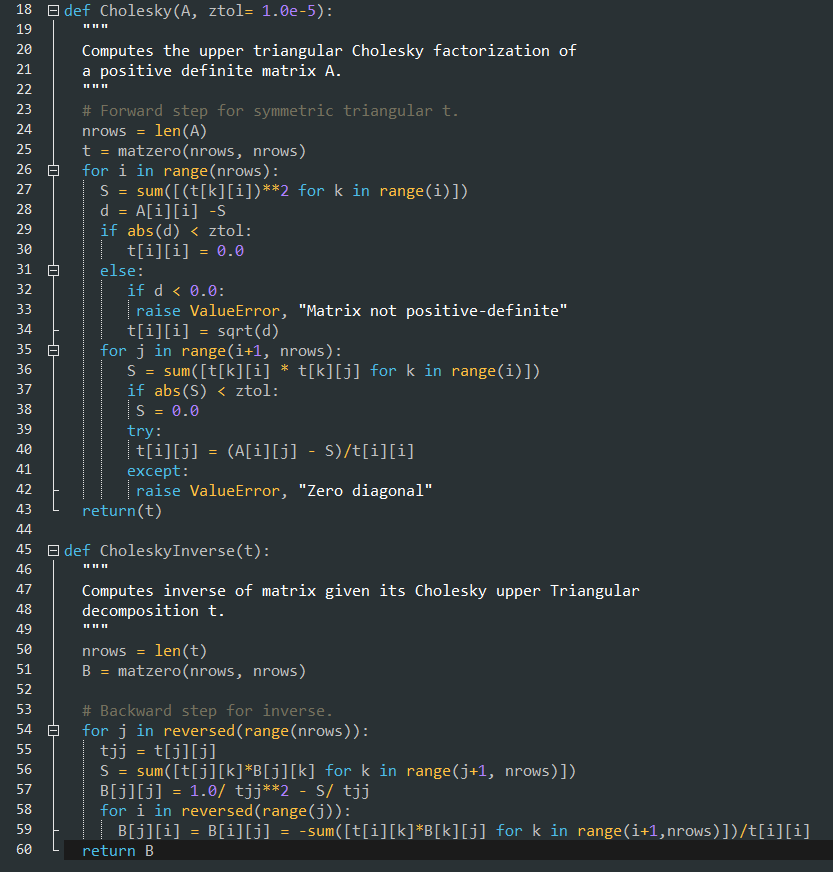

So, also related to the same class as before: they had this Python matrix class with an inverse function. Typically when I invert a matrix I take the adjoint divided by the determinant, where the adjoint is the transpose of the cofactor matrix. But this class used something called a Cholesky decomposition, which I had not heard of before, and I figured maybe other people haven't either. (It only applies to symmetric positive-definite matrices.) Anyway, they pulled this code from a blog post by "Ernesto P. Adorio, Ph.D.", and from the comments it looks like he got it from a numerical analysis book: "The Cholesky code was based on some numerical analysis book most probably by Cheney or by Budden and Faires." In my Numerical Analysis course we used a book by Timothy Sauer and I don't believe that was mentioned, but it was a good course and book in my opinion. Here is a Wayback Machine link to the blog post. I'll run a Beyond Compare against the latest version the course uses in a moment, just as a sanity check for bugs relative to the original, and also make a C++ version of it so I have multiple ways of calculating an inverse. But I figured I'd bring some attention to this alternative method of computing a matrix inverse. cholesky.py

And since we are talking about numerical analysis, I'll link my Pollard rho factoring code here: factor.c. It can factor a number of any length into its primes (although it will take a long time for big ones) and uses the GNU MP (GMP) library. If you understand encryption, you know that schemes like RSA rely on factoring large numbers into their primes being computationally expensive. Also some Windows binaries; I think these were Intel-specific and built with MinGW, but hopefully they still run. factor.zip
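Going back to the Cholesky inverse: the sketch below shows the general idea in plain Python. It is not Adorio's code or the course's matrix class, and the function names `cholesky` and `cholesky_inverse` are mine. It factors A into L times L-transpose, then solves for each column of the inverse with a forward and a back substitution.

```python
import math

def cholesky(A):
    """Return lower-triangular L with A = L L^T.

    A must be symmetric positive-definite; anything else raises ValueError.
    """
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                d = A[i][i] - s
                if d <= 0.0:
                    raise ValueError("matrix is not positive-definite")
                L[i][j] = math.sqrt(d)
            else:
                L[i][j] = (A[i][j] - s) / L[j][j]
    return L

def cholesky_inverse(A):
    """Invert A by solving L L^T x = e_col for every column of the identity."""
    n = len(A)
    L = cholesky(A)
    inv = [[0.0] * n for _ in range(n)]
    for col in range(n):
        # Forward substitution: L y = e_col
        y = [0.0] * n
        for i in range(n):
            rhs = 1.0 if i == col else 0.0
            y[i] = (rhs - sum(L[i][k] * y[k] for k in range(i))) / L[i][i]
        # Back substitution: L^T x = y
        x = [0.0] * n
        for i in reversed(range(n)):
            x[i] = (y[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
        for i in range(n):
            inv[i][col] = x[i]
    return inv

A = [[4.0, 2.0], [2.0, 3.0]]
A_inv = cholesky_inverse(A)   # should match the adjoint/determinant answer
```

For this 2x2 example the adjoint-over-determinant method gives (1/8) * [[3, -2], [-2, 4]], so the two approaches can be checked against each other. The restriction to symmetric positive-definite input is not a problem for a Kalman filter, since the matrix being inverted there is a covariance.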

54 / 55

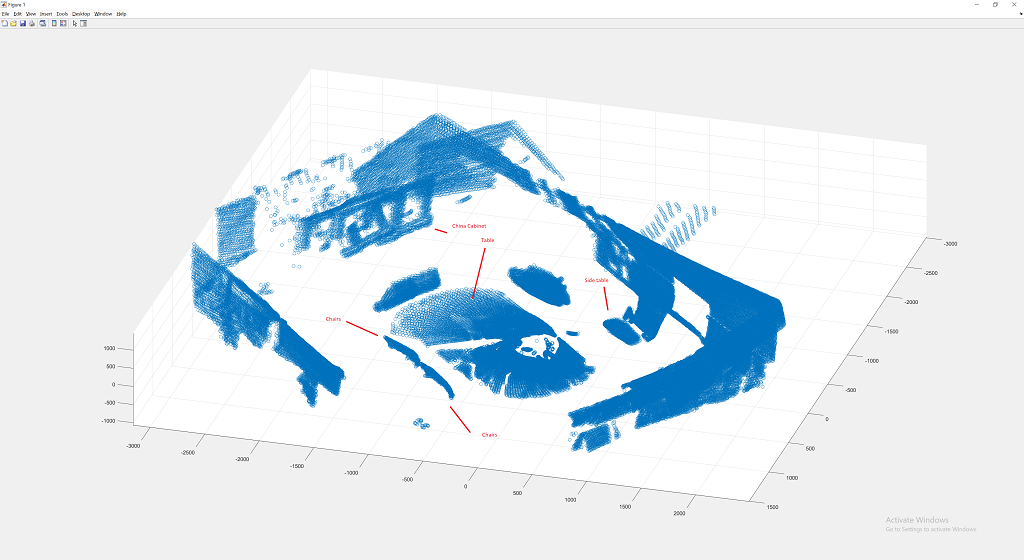

So, this is a 3D point cloud generated from a "Slamtec RPLIDAR A1" lidar scanner that I got from Adafruit. We had "hardware challenges" to do as part of the RAIT/AI4R course at Georgia Tech, and I thought it would be interesting to attempt to get a 3D scan from a 2D lidar mounted at 45 degrees. So essentially a small stepper motor (Mercury Motor SM-42BYG011-25), driven by an Allegro A4988, spun the lidar scanner, and at each step it collected a 2D scan. A little data processing and you can extract a 3D point cloud.

One challenge was that the cable from the scanner was causing a bit of drag on the stepper motor, resulting in an incorrect rotation (it should be 1.8 degrees per step). To fix that I mounted the laptop on a little camping table and added a heavy C-clamp to hold the stepper motor. The setup was pretty improvised, using hot glue, breadboards, 9V batteries, and an Arduino, but the results were not too bad I think. The files are here without much explanation, but there is nothing too complex going on really. 3dLidar.zip
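The "little data processing" step could look roughly like the sketch below. This is not the code from 3dLidar.zip. The 1.8 degrees per step and the 45 degree mount come from the text, but the exact geometry is an assumption on my part: the lidar measures in its own plane, that plane is tilted about the x axis, and the stepper spins everything about the vertical z axis. The function names and the input layout are hypothetical too.

```python
import math

STEP_DEG = 1.8    # stepper rotation per step (from the text)
TILT_DEG = 45.0   # lidar mounting angle (from the text)

def scan_point_to_xyz(step, scan_angle_deg, distance):
    """Convert one lidar sample into a 3D point.

    step: which stepper position the 2D scan was taken at (0, 1, 2, ...)
    scan_angle_deg, distance: the polar sample reported by the lidar
    Assumed geometry: scan plane tilted about x, whole rig rotating about z.
    """
    a = math.radians(scan_angle_deg)
    tilt = math.radians(TILT_DEG)
    phi = math.radians(step * STEP_DEG)
    # Point inside the lidar's own flat scan plane
    lx, ly = distance * math.cos(a), distance * math.sin(a)
    # Tilt that plane about the x axis
    tx, ty, tz = lx, ly * math.cos(tilt), ly * math.sin(tilt)
    # Spin about the vertical axis by the stepper angle
    x = tx * math.cos(phi) - ty * math.sin(phi)
    y = tx * math.sin(phi) + ty * math.cos(phi)
    return (x, y, tz)

def scans_to_cloud(scans):
    """scans[i] is the 2D scan at step i: a list of (angle_deg, distance) pairs."""
    return [scan_point_to_xyz(step, a, d)
            for step, scan in enumerate(scans)
            for a, d in scan]

# Two tiny fake scans, just to show the data layout.
cloud = scans_to_cloud([[(0.0, 1.0), (90.0, 1.0)], [(0.0, 1.0)]])
```

This also shows why the cable drag mattered: the conversion trusts `step * STEP_DEG`, so any missed or partial step rotates every later scan by the wrong amount.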


55 / 55

Alright, so this image is from a project that I did for a machine learning course that I took (the image is essentially just YOLOv11). We only had a few weeks, so I opted to use existing models. Essentially I was converting an image to a 3D point cloud for robotics. Say you have a robot arm and you want to pick something up: you need to know pretty well where you are in space as well as where the object you are picking up is. So I used YOLOv11 for object segmentation and DepthAnythingV2 for depth estimation. From a regular image, you get the object class detections from YOLO as well as the object masks. With DepthAnythingV2 you get the depth of the scene, which when combined with the object mask gives you a width, height, and depth. Take the min/max along each coordinate axis and you get a 3D AABB (axis-aligned bounding box).
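The min/max step is simple enough to sketch in a few lines. The example below is not the project code: the function name `aabb_from_mask` and the tiny hand-written mask and depth arrays are made up, and it assumes the mask and depth map have already been produced by the two models at the same resolution. To keep it short, x and y stay in pixel units; converting them to real-world units would need the camera intrinsics.

```python
def aabb_from_mask(mask, depth):
    """Return ((xmin, ymin, zmin), (xmax, ymax, zmax)) over the masked pixels.

    mask:  2D list of booleans, True where the object is
    depth: 2D list of floats with the same shape, Z distance per pixel
    Returns None if the mask is empty.
    """
    pts = [(x, y, depth[y][x])
           for y, row in enumerate(mask)
           for x, inside in enumerate(row) if inside]
    if not pts:
        return None
    mins = tuple(min(p[i] for p in pts) for i in range(3))
    maxs = tuple(max(p[i] for p in pts) for i in range(3))
    return mins, maxs

# A 3x3 "image" with a three-pixel object in the top middle.
mask = [[False, True,  True],
        [False, True,  False],
        [False, False, False]]
depth = [[9.0, 2.0, 2.5],
         [9.0, 1.5, 9.0],
         [9.0, 9.0, 9.0]]
box = aabb_from_mask(mask, depth)
```

Only depth values under the mask contribute, so the far background (the 9.0 values) does not stretch the box.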

When I started learning about machine learning, I always thought you take your input features and map them to a few output classes. I knew that the input features can be an image (essentially all the pixels), but I hadn't really seen images as outputs before, which is essentially what R-CNN and later YOLO give you: boxes and, in the segmentation variants, per-pixel masks. (YOLO being a performance optimization allowing real-time object detection.)

Converting depth to Z distance is usually Z = 1 / depth, since the relative models output inverse depth, but DepthAnythingV2 has a "metric" model (which is better in every way) that gives you Z = depth directly. Note that the metric model has separate training weights for indoor vs. outdoor scenes. Also note that the generated depth isn't perfect and doesn't hold up to rotation very well.

But while working on this project I had my mind blown twice, which is why I wanted to get this online and spread the knowledge a bit. First, by Gaussian Splatting, which is essentially fast photorealistic point clouds. You go to a location, take some photogrammetry-style photos (move around something and take photos from multiple angles, short distances apart), and generate Gaussian splats (check out something like PolyCam's webpage; they do photogrammetry and have an online Gaussian splat generation tool). Then you have a 3D representation of the real-world scene that renders at 100+ FPS and looks photorealistic. The biggest downside is the static nature of the scene and, I assume, difficulty animating it, similar to voxels. The geometry is composed of 3D ellipsoids (3D Gaussians) that also have an alpha blending value. Essentially, for each pixel you blend the Gaussian splats along the view direction, front to back, until the opacity for that pixel saturates. Kind of like ray tracing and volumetric rendering.

I actually stumbled onto Nvidia's NeRF first, which was neat. Also COLMAP, which they use to generate camera poses from multiple images (structure from motion), is pretty impressive. (NeRF and Gaussian Splatting both use COLMAP to generate the data from images.)
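The "render until the opacity saturates" part can be sketched for a single pixel as plain front-to-back alpha blending. This is a simplified illustration and not the reference implementation: the function name, the saturation threshold, and the input format are assumptions, and in a real splat renderer each alpha would come from evaluating the projected Gaussian at that pixel rather than being handed in directly.

```python
def composite_front_to_back(splats, saturation=0.999):
    """Blend (color, alpha) samples for one pixel, sorted nearest first.

    color is an (r, g, b) tuple; alpha is that splat's opacity at this pixel.
    Stops early once the accumulated opacity reaches the saturation threshold.
    Returns (rgb, accumulated_alpha, number_of_splats_used).
    """
    rgb = [0.0, 0.0, 0.0]
    transmittance = 1.0   # fraction of light that still gets through
    used = 0
    for color, alpha in splats:
        weight = transmittance * alpha
        for c in range(3):
            rgb[c] += weight * color[c]
        transmittance *= (1.0 - alpha)
        used += 1
        if 1.0 - transmittance >= saturation:
            break         # pixel is opaque; anything behind is hidden
    return tuple(rgb), 1.0 - transmittance, used

# Half-transparent red in front of solid green in front of solid blue.
pixel_splats = [((1.0, 0.0, 0.0), 0.5),
                ((0.0, 1.0, 0.0), 1.0),
                ((0.0, 0.0, 1.0), 1.0)]
rgb, alpha, used = composite_front_to_back(pixel_splats)
```

Here the blue splat is never touched because the pixel is already opaque after the green one, which is the early exit that helps keep rendering fast.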

But, as I was looking into Gaussian Splatting I was wondering how they took 3d point clouds from COLMAP, made gaussians, and then "corrected" the gaussians using back propagation. That's when I stumbled into differentiable rendering.

Differentiable rendering / inverse rendering is essentially software rendering with the property that you can back propagate through it, because the entire process is differentiable. You know how machine learning corrects the weights of a model by using back propagation on the loss function (usually mean squared error or log loss / cross entropy), which compares the predicted outputs to the intended outputs, and then steps the weights in the negative gradient direction, the direction that reduces the loss the most.

Differentiable rendering does the same, except you have a target image and correct the primitives to match that target image. Say you have a bunch of random triangles and pictures of a car from multiple angles. You can correct the positions, colors, and orientations of those triangles to best match the car from all angles. Not sure if that's the best explanation, but it's pretty amazing. If you think about rendering, the biggest issue regarding differentiability is the rasterization of triangles to pixels. Once you fix that, there is something called autodiff, which keeps track of the derivatives of your original functions as you write code. Those derivatives are then used during back propagation in the "backward" step after the initial forward pass. I don't want to get into the weeds too much, but essentially you can back propagate from a 2D image back to 3D primitives.
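Here is about the smallest version of that idea I can think of, as a hedged toy and not how any real differentiable renderer is written. The "scene" is a single soft blob on a 1D image, the only parameter is its position `mu`, and the derivative is written out by hand instead of coming from autodiff. The names `render`, `loss_and_grad`, and `fit`, plus the image width, blob size, learning rate, and step count, are all arbitrary choices for the example. The blob is soft on purpose: a hard-edged shape would have zero gradient almost everywhere, which is the rasterization problem mentioned above.

```python
import math

def render(mu, width=32, sigma=3.0):
    """'Render' a 1D image containing a soft Gaussian blob centred at mu."""
    return [math.exp(-((x - mu) ** 2) / (2.0 * sigma ** 2)) for x in range(width)]

def loss_and_grad(mu, target, sigma=3.0):
    """Mean squared error against the target image, and d(loss)/d(mu)."""
    image = render(mu, len(target), sigma)
    n = len(target)
    loss = sum((i - t) ** 2 for i, t in zip(image, target)) / n
    # Chain rule: d(image[x])/d(mu) = image[x] * (x - mu) / sigma^2
    grad = sum(2.0 * (i - t) * i * (x - mu) / sigma ** 2
               for x, (i, t) in enumerate(zip(image, target))) / n
    return loss, grad

def fit(target, mu=12.0, lr=20.0, steps=300):
    """Plain gradient descent on the blob position, starting from a wrong guess."""
    for _ in range(steps):
        _, grad = loss_and_grad(mu, target)
        mu -= lr * grad   # step against the gradient
    return mu

target = render(20.0)      # stands in for the "photo" we want to match
recovered = fit(target)    # ends up very close to 20
```

A real system does the same thing with many more parameters (positions, colors, orientations of many primitives), several target images from different camera poses, and autodiff producing the gradients instead of a hand-derived formula.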

Anyway, here are some links about all these things:

YOLO / DepthAnythingV2 Project Page

YOLO / DepthAnythingV2 Project Video

Original PjReddie YOLO page

Ultralytics YOLOv11 Page

DepthAnythingV2 Page

Gaussian Splatting Paper Github

Nvidia NeRF blog page

COLMAP

TinyDiffRast - Differentiable Rendering

Differentiable Rendering interactive example using ellipses

Gaussian Splatting Talk

Robot Arm using Isaac Sim

Nvidia Groot page


I know what you are thinking... this webpage looks horrible, but that's okay, pretty ones usually don't validate ;o)